An ethical AI solution – utopian or realistic?

Who is responsible for the decisions made by artificial intelligence? What considerations will companies need to take into account before they start using AI? The AI Sustainability Center in Sweden identifies, measures and studies these questions and many other aspects of artificial intelligence ethics.

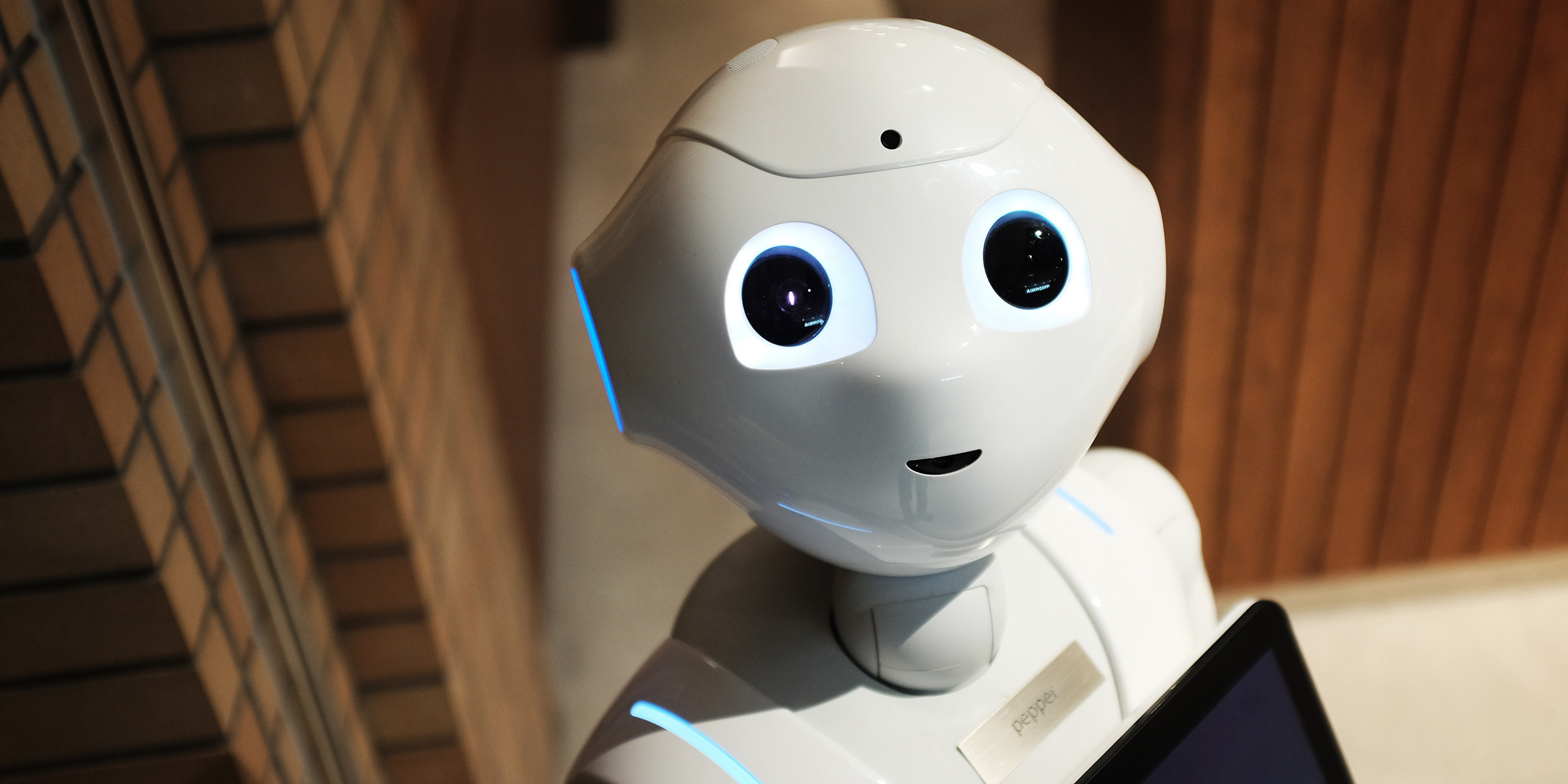

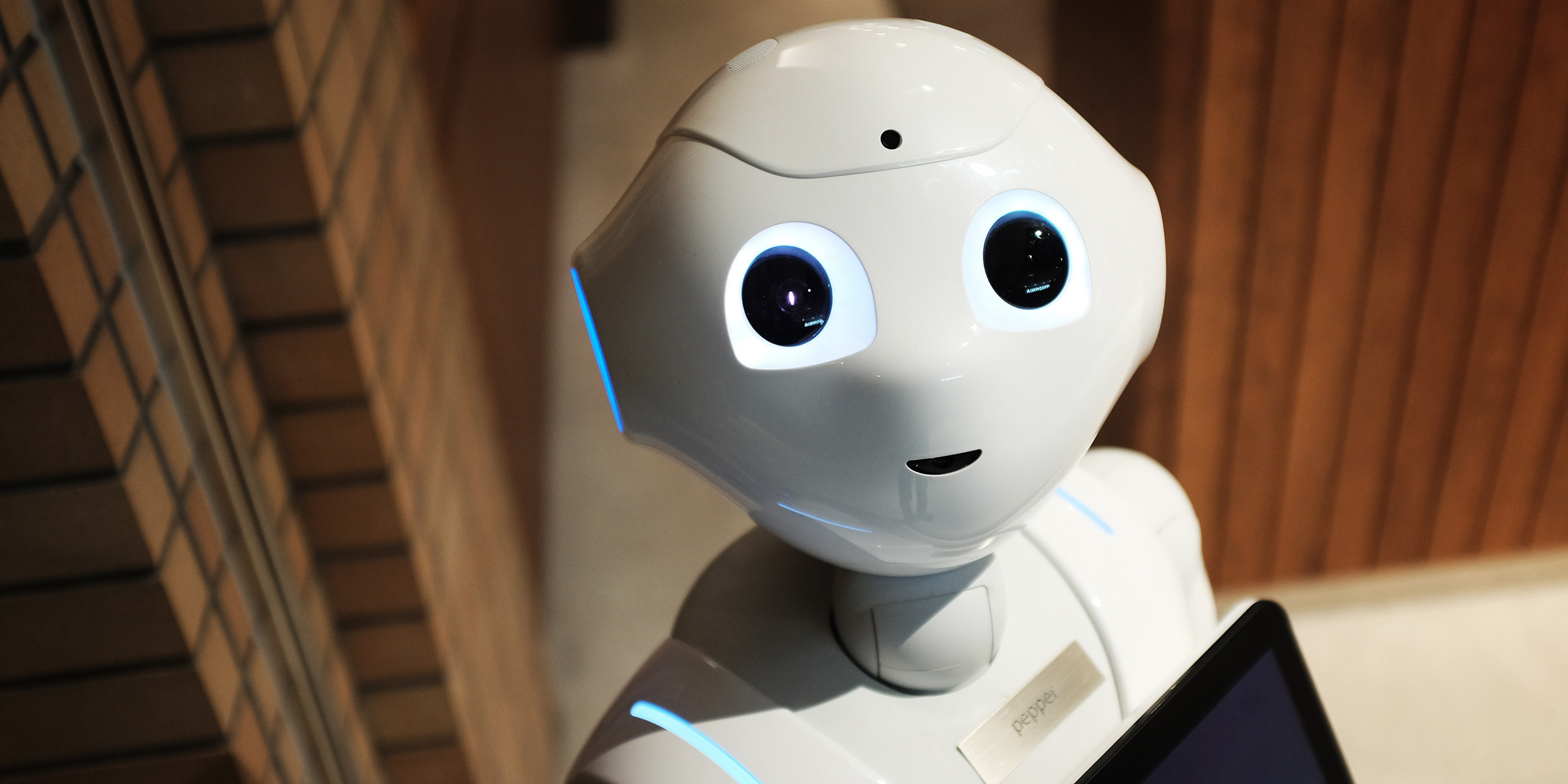

Most of us come into contact with artificial intelligence every day. In fact, the best artificial intelligence applications are the ones we do not even notice. However, in terms of ethics, it is important for people to know whether they are interacting with a human or a machine, as well as to be assured that the recommendations they receive are not based on bias in the data. Artificial intelligence has no morals, but the design team must have a precise understanding of the type of intelligence they are creating. Ethical artificial intelligence applications can be a source of competitive advantage, strengthening a company’s brand and increasing customers’ trust in the company’s products and services.

“Artificial intelligence gives us recommendations on things like what to buy, which route would get us to our destination fastest, and what information to enter into a search engine. The challenge of the future will be to make artificial intelligence applications that are more capable of taking social and human influences into consideration, in addition to profit and efficiency. Artificial intelligence will become a means for enhancing prosperity, which is why it is important for companies to have ethical principles, such as transparency and fairness, as well as legal instruments for complying with these instructions. This would enable us to prevent unintentional ethical pitfalls,” say Anna Felländer and Elaine Weidman Grunewald, co-founders of the AI Sustainability Center.

“Artificial intelligence should create value for individuals, businesses and societies in such a way that it is led by humans and not machines. Artificial intelligence holds enormous potential. It will help to treat diseases and develop energy-efficient cities and concepts such as precision farming, which reduces the need for fertilisers, pesticides and water,” Felländer says. “The concept of AI sustainability means identifying, measuring and governing how AI is scaled in a broader ethical and social context. This has not yet been given enough attention,” Felländer continues, “and the consequences of not considering the broader aspects can be severe and costly.”

All organisations need to be aware of how their use of data and AI is affecting society

Society is increasingly concerned about AI and about ensuring that it is used for the good of humanity. Several organisations are designing principles for ethical AI. Yet this is not enough. There needs to be both a multidisciplinary approach and a practical framework for assessing, avoiding and governing the risks associated with AI.

“The AI Sustainability Center is offering organisations a practical framework and tools to avoid unintended pitfalls that could lead to privacy intrusion, discrimination and social exclusion,” says Weidman Grunewald. “We believe there is a trust premium to be gained by achieving transparency, explainability and accountability in your AI applications. Governing AI in a sustainable way requires that ethical questions are put at the top of the agenda. For example, how far can we go in our collection of personal information for credit risk scoring? Is it OK for media to recommend articles to a person with racist opinions when those articles support his or her orientation?” Weidman Grunewald continues.

Can artificial intelligence be taught to make ethical choices?

“It is possible to teach artificial intelligence to make ethical choices. In such cases, algorithms favour aspects such as diversity and equality between the sexes. For example, when a company is recruiting a new CEO, it will not automatically hire a white Western male based on historic data. In itself, technology is neutral – we must ensure that it is used correctly,” Felländer concludes.